When Everything Shifts Overnight (#18)

Working with an Unpredictable Collaborator

Why does this need to be so f*cking hard?!

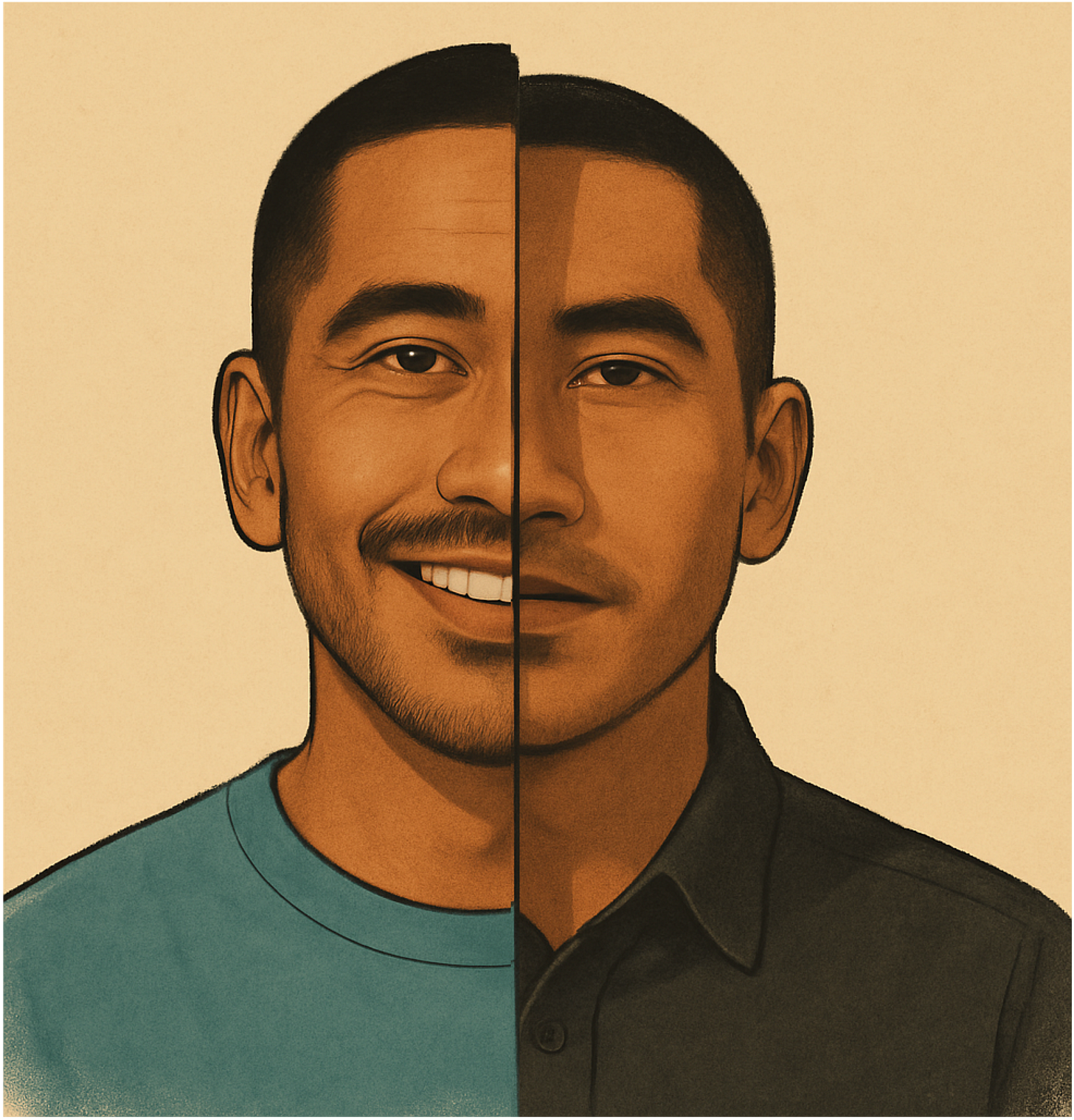

I swear, I’m working with a different person every few months. We grind through days of awkward misunderstandings, recalibrating with “help me, help you, help me” Jerry Maguire moments. We finally hit our stride… and then one morning, they walk in speaking a different language.

It’s like a girlfriend disappearing for a weekend, coming back from a find-your-true-self retreat, and suddenly journaling in the third person. Or your best friend goes to therapy, tries microdosing in Mexico, runs a half-marathon, and returns with a whole new personal philosophy.

The relationship whiplash is real. One month they’re predictable and curious. The next time you see them, they’re open and “optimized.” New worldview. New rules for how we talk. New interpretations of your behaviors.

That’s what it’s like working with AI models. ChatGPT 4o to ChatGPT 5. Claude before and after its “enterprise maturity phase.” Gemini when it stopped trying to be helpful and started trying to be correct.

Each version comes back as a better self. But better for who? And what happens when your work depends on the quirks, instincts, and conversational shorthand you built with the old one?

To understand why that change hits so hard, you have to look closer at what’s really shifting.

When the AI You Trained Disappears

With humans, change usually occurs in small ways. But when it happens all at once, it disorients you. You can’t build on old conversations, because that person now operates from a completely different frame of reference.

Similarly with AI, one day you log in and everything is different. No warning. No conversation about how the new behavior affects you. Suddenly, the prompt strategies you refined stop working. Your model responds in a new voice, with a new rhythm, sometimes with a new logic.

This matters to us as writers, because documentation thrives on internal coherence. A break in voice, logic, or sequence can leave users with mismatched guidance that feels like it came from five different authors.

Seeing the Identity Drift in Action

In technical writing, stability matters more than brilliance.

Users don’t care if your onboarding article is a work of art. They care that every part of it works together, day after day, without surprising them.

AI instability disrupts that. Even small shifts in how a model interprets a prompt can ripple through documentation projects, especially when you’re relying on that model for repeated, structured tasks.

Here’s a simple example. In March 2024, I used this release-note-style prompt with ChatGPT.

Write a positive, present-tense, brief, complete single-sentence summary for each item. Each must include a subject and only objective facts. Exclude opinions, assumptions, or conclusions about the end-user experience.

Fixed an issue where…

Stability:

Navigating away while player is being set up causes crash

During DAI livestream, captionPresentedAt stops firing

IMA Issues:

PiP closes after DAI VOD asset ends

FF and RW controls not appearing

This is what ChatGPT returned:

March 2024 - GPT Pro

Addressed a crash on iOS that occurred when navigating away during player setup.

Resolved an issue on iOS where captionPresentedAt ceased to trigger during DAI livestreams.

Corrected an IMA issue on iOS involving DAI and PiP where PiP would close after a DAI VOD asset ends.

Fixed an IMA issue on iOS with DAI and PiP where fast-forward and rewind controls were not appearing.

Fast-forward to August 2025.

Same prompt. New model.

August 2025 - GPT-5 Plus

The iOS player no longer crashes when navigation occurs during player setup.

The captionPresentedAt method now continues firing during DAI livestreams on iOS.

Picture-in-Picture on iOS now remains open after a DAI VOD asset ends.

Fast-forward and rewind controls now appear in Picture-in-Picture for DAI on iOS.

Both outputs are fine. But they frame the same facts differently: verb choice, tense, phrasing, and how the platform is referenced. In release notes, that’s a mild style inconsistency.
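If you want to put a rough number on that difference, here is a minimal Python sketch using only the standard library. It pairs the two ChatGPT outputs quoted above by position, then reports a character-level similarity ratio and the opening word of each sentence. The function name `compare_runs` and the choice of `difflib.SequenceMatcher` as the measure are my assumptions for illustration, not an established way to score model drift.

```python
from difflib import SequenceMatcher

# Outputs copied from the two ChatGPT runs quoted above.
MARCH_2024 = [
    "Addressed a crash on iOS that occurred when navigating away during player setup.",
    "Resolved an issue on iOS where captionPresentedAt ceased to trigger during DAI livestreams.",
    "Corrected an IMA issue on iOS involving DAI and PiP where PiP would close after a DAI VOD asset ends.",
    "Fixed an IMA issue on iOS with DAI and PiP where fast-forward and rewind controls were not appearing.",
]
AUGUST_2025 = [
    "The iOS player no longer crashes when navigation occurs during player setup.",
    "The captionPresentedAt method now continues firing during DAI livestreams on iOS.",
    "Picture-in-Picture on iOS now remains open after a DAI VOD asset ends.",
    "Fast-forward and rewind controls now appear in Picture-in-Picture for DAI on iOS.",
]


def compare_runs(old, new):
    """Pair sentences by position and report surface-level differences.

    Hypothetical helper: the similarity ratio is character-based, so it
    measures wording overlap, not whether the facts still match.
    """
    report = []
    for before, after in zip(old, new):
        report.append({
            "similarity": round(SequenceMatcher(None, before, after).ratio(), 2),
            "old_opening": before.split()[0],
            "new_opening": after.split()[0],
        })
    return report


if __name__ == "__main__":
    for row in compare_runs(MARCH_2024, AUGUST_2025):
        print(row)
```

The opening words alone tell most of the story: the older run leads with a past-tense verb ("Addressed," "Resolved"), while the newer run leads with the thing that changed. A low ratio here signals a change in wording only; a human still has to confirm the facts survived.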

This is not limited to ChatGPT. You can see a similar pattern with Anthropic's Claude:

March 2024

Navigating away while the player is being set up causes a crash on iOS.

During DAI livestream, captionPresentedAt stops firing on iOS.

For DAI VOD asset, PiP closes after the asset ends on iOS.

For DAI on iOS with PiP, the FF and RW controls are not appearing.

August 2025 - Sonnet 4

Stability

The application crashes when users navigate away during player setup.

The captionPresentedAt event stops firing during DAI livestream playback.

IMA Issues:

Picture-in-Picture mode closes automatically when DAI VOD assets reach their end point.

Fast forward and rewind controls do not display in the interface.

But here’s the point: if a model treats something as simple and constrained as release notes so differently between versions, what happens to more complex workflows?

Think of AI-assisted documentation pipelines:

Agents generating multi-page conceptual overviews

Automated evaluations grading draft quality

Correction loops refining weak responses

Safeguards to catch hallucinations or omissions

If the model’s baseline framing, detail level, or interpretation of instructions changes without warning, every downstream process has to adapt.
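To make "baseline framing" and "detail level" concrete, here is a small Python sketch that reduces a response to its shape (how many items, how many headings, how wordy) and lists which of those fields moved between two runs. Everything in it is an assumption for illustration: the names `fingerprint` and `changed_fields`, the rule that a line without closing punctuation counts as a heading, and the three-word tolerance.

```python
from statistics import mean


def fingerprint(output: str) -> dict:
    """Summarize the shape of a model response, not its wording."""
    lines = [ln.strip() for ln in output.splitlines() if ln.strip()]
    # Assumption: a line that lacks terminal punctuation is a heading.
    headings = [ln for ln in lines if not ln.endswith((".", "!", "?"))]
    items = [ln for ln in lines if ln not in headings]
    return {
        "items": len(items),
        "headings": len(headings),
        "mean_words": round(mean(len(i.split()) for i in items), 1) if items else 0.0,
    }


def changed_fields(baseline: dict, candidate: dict, word_tolerance: float = 3.0) -> list:
    """Return the fingerprint fields that differ between two runs."""
    changed = [k for k in ("items", "headings") if baseline[k] != candidate[k]]
    # Hypothetical tolerance: ignore small swings in average sentence length.
    if abs(baseline["mean_words"] - candidate["mean_words"]) > word_tolerance:
        changed.append("mean_words")
    return changed


if __name__ == "__main__":
    # Invented sample strings, not real model output.
    flat = "The player no longer crashes.\nControls now appear."
    grouped = "Stability\nThe player no longer crashes.\nIMA Issues:\nControls now appear."
    print(changed_fields(fingerprint(flat), fingerprint(grouped)))
```

A check like this says nothing about quality. It only flags that the model started grouping, trimming, or padding its answers, which is the moment the downstream steps deserve a second look.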

It’s the AI equivalent of human misinterpretation: last month, your silence was taken as considerate listening; this month, it’s read as manipulative condescension.

Same behavior.

Completely different reading.

Let's call this identity drift: the quiet but consequential shifts in how an AI interprets and expresses the same instructions over time. Sometimes it’s subtle phrasing. Sometimes it’s structure, detail, or tone.

So, how do we address this?
